Direct-To-Chip Liquid Cooling

As the computer chip industry explodes with innovation, data centre power density is increasing. Data centre operators are looking to use cutting-edge computer chips (also called microchips or just chips) to perform complex calculations faster than ever. To make this possible, semiconductor manufacturers are packing more transistors onto each piece of silicon, increasing both computing power and energy consumption. Released in 1971, Intel’s 4004 was a groundbreaking general-purpose chip that stunned the industry by packing 2,300 transistors onto a single microchip. Now, Intel plans to squeeze one trillion transistors into a single chip package by 2030. These chips consume more electrical power, which translates directly into more heat to dissipate. Data centre operators are being forced to reconsider conventional cooling strategies to manage the heat generated by this equipment.

Traditional server rooms have effectively removed the heat dissipated by computer chips by circulating conditioned air through the room. As chip power density increases, air is becoming a less viable medium for heat removal. Instead, data centre owners and operators are turning to a circulating water-based liquid coolant, which has a far higher volumetric heat capacity than air, to remove heat from servers.
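To put the heat-capacity argument in numbers, the sketch below applies the sensible-heat relation Q = ρ · V̇ · cp · ΔT to air and to water. The 100 kW load, the 10 K temperature rise, and the property values are assumed, approximate room-temperature figures chosen for illustration, not data from a specific facility.

```python
# Sketch: volume flow of air vs. water needed to carry the same heat load,
# from the sensible-heat relation Q = rho * V_dot * cp * dT.
# Property values are approximate room-temperature figures (assumed).

AIR_DENSITY = 1.2        # kg/m^3
AIR_CP = 1006.0          # J/(kg*K)
WATER_DENSITY = 998.0    # kg/m^3
WATER_CP = 4186.0        # J/(kg*K)


def volumetric_flow(heat_load_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volume flow (m^3/s) that absorbs heat_load_w with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (density * cp * delta_t_k)


def compare_media(heat_load_w: float = 100_000.0, delta_t_k: float = 10.0) -> dict:
    """Return the air and water flows for one heat load and their ratio."""
    air = volumetric_flow(heat_load_w, AIR_DENSITY, AIR_CP, delta_t_k)
    water = volumetric_flow(heat_load_w, WATER_DENSITY, WATER_CP, delta_t_k)
    return {"air_m3_s": air, "water_m3_s": water, "ratio": air / water}


if __name__ == "__main__":
    flows = compare_media()  # hypothetical 100 kW load, 10 K rise
    print(f"Air:   {flows['air_m3_s']:.2f} m^3/s")
    print(f"Water: {flows['water_m3_s'] * 1000:.2f} L/s")
    print(f"Air needs roughly {flows['ratio']:.0f} times the volume flow of water")
```

Under these assumptions the same load needs about 8.3 m³/s of air but only about 2.4 L/s of water, a ratio on the order of 3,500 to 1.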

Liquid cooling of servers generally takes a direct-to-chip approach: a fluid is circulated through a cold-plate heat exchanger mounted directly on the chip. Heat dissipated by the chip is absorbed into the coolant loop, and the heated fluid then travels through a piping network to a heat exchanger at a lower temperature, where the heat is rejected. When designing a direct-to-chip loop, one of the first questions is always: “Where will this heat be rejected?”

This article examines three cooling sources (heat sinks) that can absorb the heat rejected by a direct-to-chip loop.

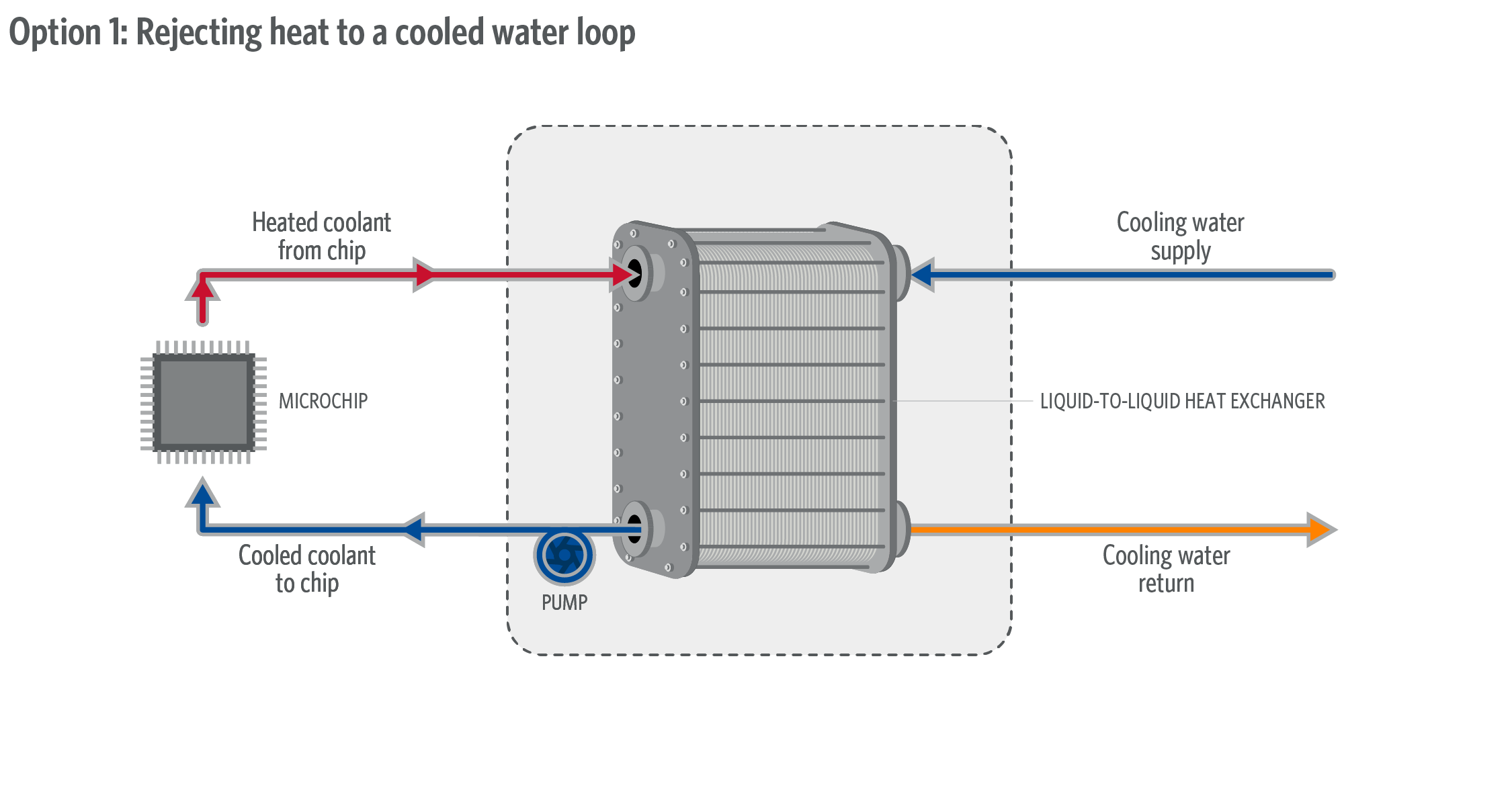

Option 1: Reject Heat to a Cooling Water Loop

A facility’s cooling water loop is generally the preferred heat sink (source of cooling) for direct-to-chip applications. This approach requires a liquid-to-liquid heat exchanger to transfer heat from the warmer direct-to-chip loop into the colder facility water loop.

Coolant distribution units (CDUs) are commercially available products that serve as the heart of a liquid cooling solution that rejects heat to a separate hydronic loop. A CDU is a packaged unit that includes a heat exchanger to transfer heat from the direct-to-chip loop to the building’s cooling water loop, pumps to circulate coolant through the direct-to-chip loop, on-board controls, and custom accessories to optimize operation and ease integration.
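A first-pass check when pairing a CDU with a facility loop is an energy balance: the same heat load crosses both sides of the heat exchanger, so each loop’s temperature rise follows from its flow. The sketch below assumes plain water on both sides (real chip loops often use a glycol mix), ignores losses, and uses hypothetical flows, temperatures, and a hypothetical 2 K minimum approach. It is a crude screening check, not a heat exchanger sizing method.

```python
# First-pass energy balance across a CDU's liquid-to-liquid heat exchanger.
# Assumes both loops carry plain water (real chip loops often use a glycol mix)
# and ignores heat losses, so the same heat load crosses both sides.

WATER_DENSITY = 998.0   # kg/m^3, approximate
WATER_CP = 4186.0       # J/(kg*K), approximate


def temperature_rise(heat_load_w: float, flow_l_s: float) -> float:
    """Temperature change (K) of a water stream carrying heat_load_w at flow_l_s."""
    mass_flow_kg_s = flow_l_s / 1000.0 * WATER_DENSITY
    return heat_load_w / (mass_flow_kg_s * WATER_CP)


def cdu_balance(heat_load_kw: float, chip_flow_l_s: float, chip_supply_c: float,
                facility_flow_l_s: float, facility_supply_c: float,
                min_approach_k: float = 2.0) -> dict:
    """Loop temperatures and a crude feasibility check for a counterflow CDU."""
    q_w = heat_load_kw * 1000.0
    chip_return_c = chip_supply_c + temperature_rise(q_w, chip_flow_l_s)
    facility_return_c = facility_supply_c + temperature_rise(q_w, facility_flow_l_s)
    # Cold end: chip-loop supply leaving the CDU vs. facility water entering it.
    approach_k = chip_supply_c - facility_supply_c
    # Hot end: facility water leaving must stay below the chip-loop return entering.
    feasible = approach_k >= min_approach_k and facility_return_c < chip_return_c
    return {
        "chip_return_c": chip_return_c,
        "facility_return_c": facility_return_c,
        "approach_k": approach_k,
        "feasible": feasible,
    }


if __name__ == "__main__":
    # Hypothetical 300 kW load, 35 C chip supply, 29 C facility supply.
    print(cdu_balance(300, chip_flow_l_s=10, chip_supply_c=35,
                      facility_flow_l_s=15, facility_supply_c=29))
```

If the facility water arrives too warm to leave a usable approach, the check fails, which is the situation where a cooling water loop alone cannot serve the chips.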

This strategy can deliver significant efficiency gains, making liquid cooling not just a necessity but an advantage. Running a pump to circulate coolant is generally much more efficient than running fans to push air through a data hall. At scale, the reduction in electrical use can be substantial, lowering the data centre’s total energy consumption and total cost of ownership.
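The pump-versus-fan claim can be illustrated with the shaft-power relation P = V̇ · Δp / η. The sketch below reuses the sensible-heat flow calculation and then applies hypothetical pressure rises (500 Pa for the air path, 200 kPa for the coolant loop) and a hypothetical 60% efficiency for both machines. These are round illustrative numbers, not design values.

```python
# Sketch: shaft power to move air vs. water for the same heat load,
# using P = V_dot * dp / efficiency. Pressure rises and efficiencies are
# hypothetical round numbers chosen for illustration, not design values.

AIR_DENSITY, AIR_CP = 1.2, 1006.0        # kg/m^3, J/(kg*K), approximate
WATER_DENSITY, WATER_CP = 998.0, 4186.0  # kg/m^3, J/(kg*K), approximate


def flow_m3_s(heat_load_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volume flow needed to carry heat_load_w with a delta_t_k temperature rise."""
    return heat_load_w / (density * cp * delta_t_k)


def shaft_power_w(flow: float, pressure_rise_pa: float, efficiency: float) -> float:
    """Power drawn by a fan or pump delivering `flow` against `pressure_rise_pa`."""
    return flow * pressure_rise_pa / efficiency


def fan_vs_pump(heat_load_w: float = 100_000.0, delta_t_k: float = 10.0,
                fan_dp_pa: float = 500.0, pump_dp_pa: float = 200_000.0,
                fan_eff: float = 0.6, pump_eff: float = 0.6) -> tuple[float, float]:
    """Return (fan power W, pump power W) for the same heat load."""
    air = flow_m3_s(heat_load_w, AIR_DENSITY, AIR_CP, delta_t_k)
    water = flow_m3_s(heat_load_w, WATER_DENSITY, WATER_CP, delta_t_k)
    return shaft_power_w(air, fan_dp_pa, fan_eff), shaft_power_w(water, pump_dp_pa, pump_eff)


if __name__ == "__main__":
    fan_w, pump_w = fan_vs_pump()
    print(f"Fan:  {fan_w / 1000:.1f} kW")
    print(f"Pump: {pump_w / 1000:.1f} kW")
```

With these assumptions the fan draws about 6.9 kW and the pump about 0.8 kW, even though the pump works against a pressure rise 400 times higher. Real savings depend on the residual air-cooling load and on the actual pressure drops of each system.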

This solution is generally preferred for its simplicity, efficiency, and effectiveness. Yet existing conditions may limit its applicability in retrofits. First, a cooling water loop is required to reject the heat. Server rooms that use refrigerant-based or direct-air adiabatic cooling may not have a cooling water loop, which rules out a CDU-based system. Even if a building does have a cooling water loop, retrofit concerns remain. If capped piping connections aren’t readily available, a larger shutdown may be required to bring the CDU online, and downtime is the enemy of data centre operations. Lastly, these systems require floor space, and suitable space within the data centre is not always available.

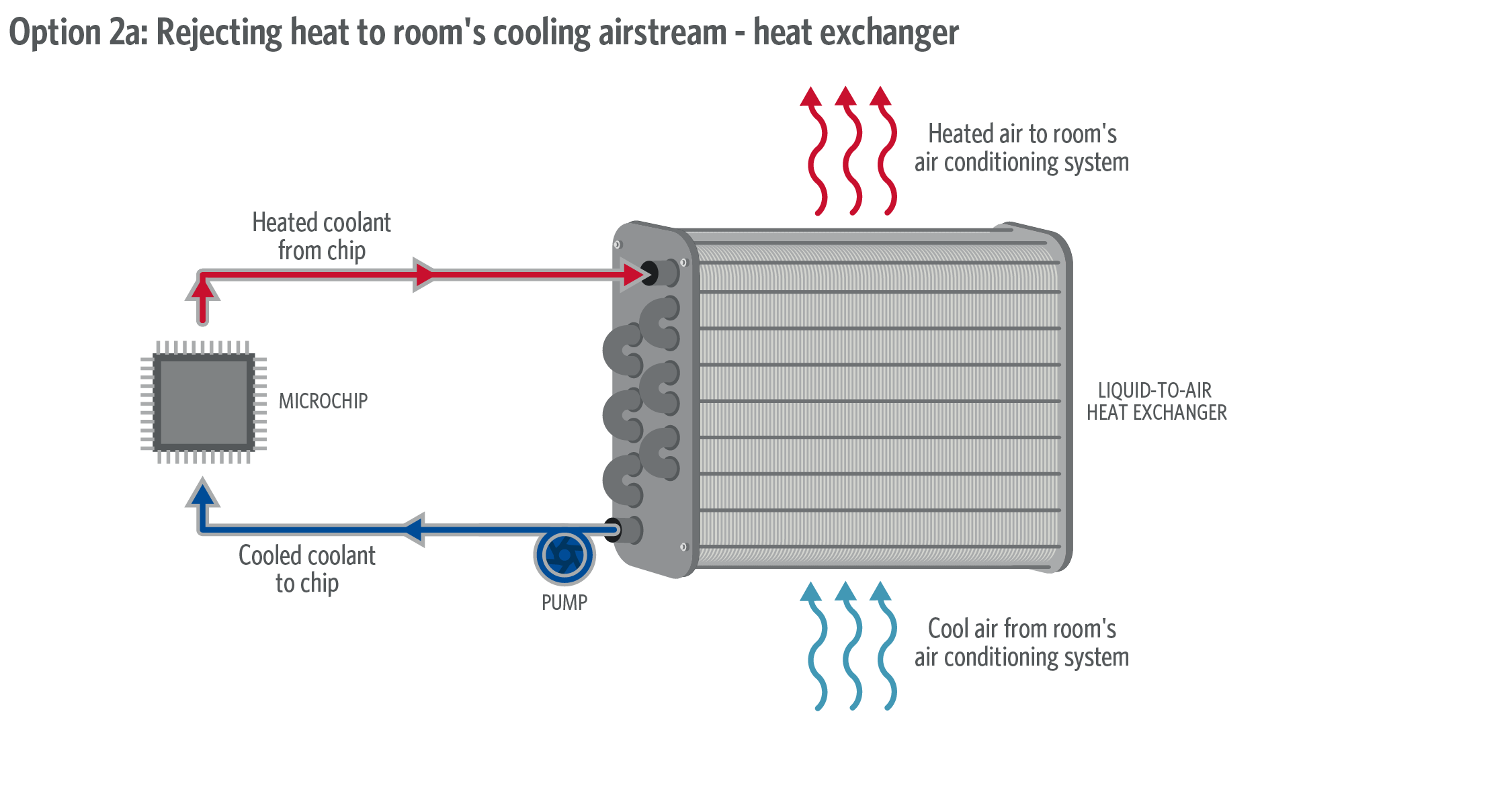

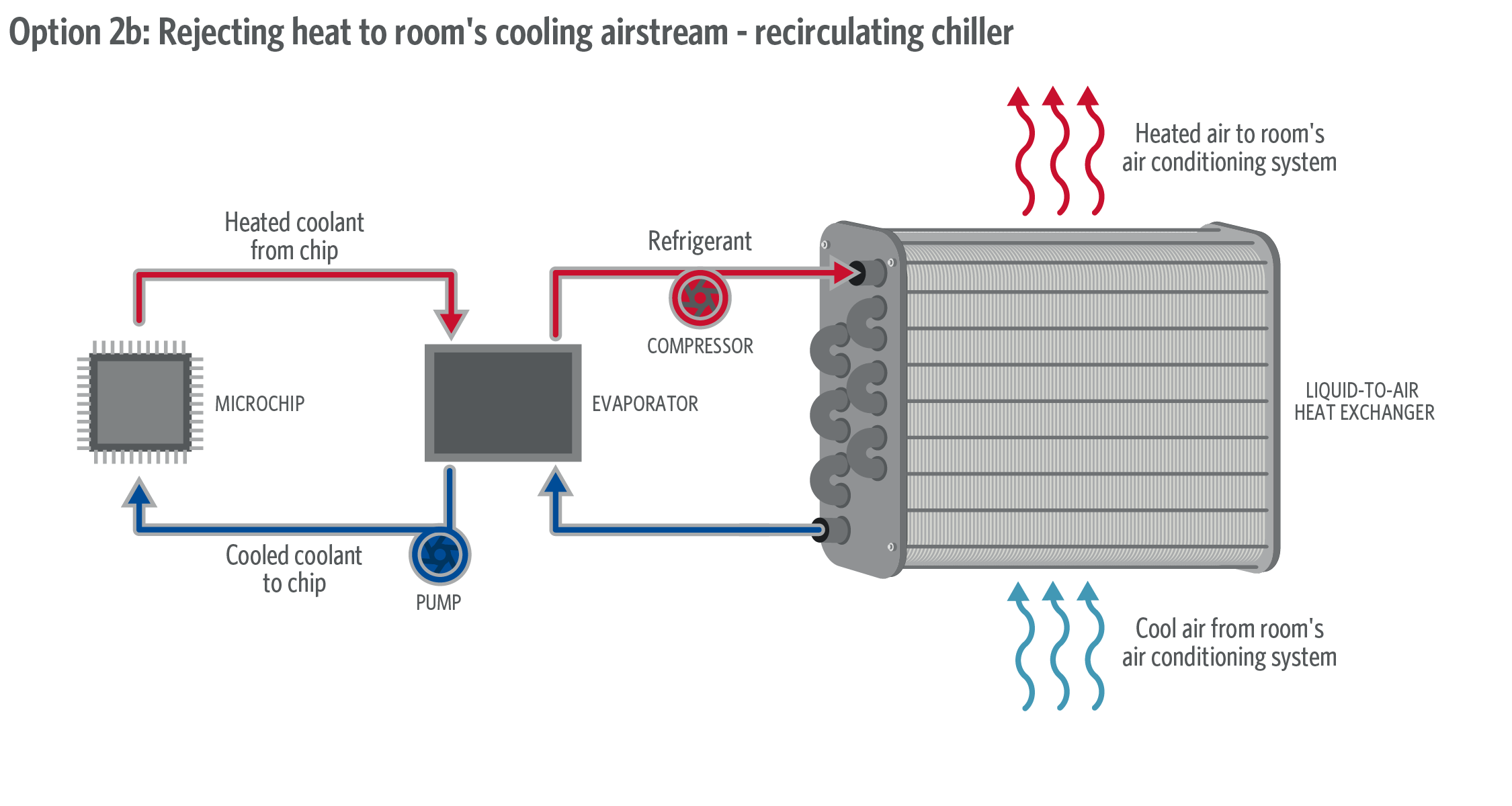

Option 2: Use Liquid Coolant to Reject Heat to the Server Room’s Air Conditioning System

There are two general strategies for rejecting heat from power-dense computer chips into a server room’s air conditioning system.

The first strategy is to place a liquid-to-air heat exchanger, fed by the chip coolant loop, in the cooling airstream. Cooling air passing across the heat exchanger then picks up the heat that the server would otherwise have dissipated directly to the room. Liquid cooling is needed inside the server because the chip’s heat is too concentrated for air to remove: the chip offers too little surface area for air to be an effective cooling medium. This strategy moves the air-side heat transfer to a heat exchanger with a much larger air-exposed surface, so the heat can be released into the airstream without affecting the chip or hardware design.
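The surface-area argument follows from Newton’s law of cooling, Q = h · A · ΔT, rearranged to A = Q / (h · ΔT). The sketch below uses a hypothetical 700 W chip, a hypothetical 20 K surface-to-fluid temperature difference, and assumed convection coefficients of 50 W/(m²·K) for forced air and 5,000 W/(m²·K) for forced liquid. Real coefficients vary widely with geometry and flow.

```python
# Sketch: heat-transfer area needed to move a chip's heat into a fluid,
# from Newton's law of cooling, Q = h * A * dT, rearranged to A = Q / (h * dT).
# The chip power, temperature difference and convection coefficients are
# hypothetical illustrative values; real coefficients vary widely with design.


def required_area_m2(heat_w: float, h_w_m2k: float, delta_t_k: float) -> float:
    """Surface area needed to transfer heat_w at coefficient h across delta_t_k."""
    return heat_w / (h_w_m2k * delta_t_k)


CHIP_POWER_W = 700.0     # hypothetical high-density processor
DELTA_T_K = 20.0         # hypothetical surface-to-fluid temperature difference
H_AIR = 50.0             # W/(m^2*K), assumed forced-air value
H_WATER = 5000.0         # W/(m^2*K), assumed forced-liquid value

if __name__ == "__main__":
    for name, h in (("air", H_AIR), ("water", H_WATER)):
        area = required_area_m2(CHIP_POWER_W, h, DELTA_T_K)
        print(f"{name}: {area * 10_000:.0f} cm^2")
```

Under these assumptions air needs about 0.7 m² of surface while liquid needs about 70 cm². A finned liquid-to-air coil can supply the larger area away from the chip, which is what makes the first strategy workable.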

This solution is reasonable because it lets data centre operators use high-density chips without tapping into an existing cooling water system. Unfortunately, it forgoes the efficiency gains generally associated with direct-to-chip liquid cooling. The air-cooling systems continue to operate as designed, and additional energy is spent pumping the coolant. As a result, the data centre’s operating cost will increase, although the facility will be able to use higher-density chips.

The second, more intensive strategy is rooted in necessity. If the local airstream is not cool enough to absorb heat from the coolant loop, an air-cooled chiller can be placed in the server room. The chiller runs an energy-intensive vapor-compression cycle to mechanically cool the direct-to-chip loop, lifting the heat to a temperature high enough to reject it to the room air. The room conditioning system must then remove this heat a second time to extract it from the building. Application-specific chillers are readily available from a number of common vendors.

This solution provides the most flexibility but is the least efficient. Running the chiller, in addition to the pumps, increases the data centre’s power consumption. Chiller selection requires special attention, and the air-cooling load in each server room will increase by the heat added by the chiller’s compressor.
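The extra room load can be estimated from a simple energy balance: the chiller’s condenser rejects the heat removed from the chip loop plus the compressor work, and the compressor work is the cooling load divided by the chiller’s coefficient of performance (COP). The sketch below ignores pump heat and uses hypothetical COP values.

```python
# Sketch: extra heat a room-located chiller adds to the server room.
# The condenser rejects the heat removed from the chip loop plus the
# compressor work, where work = cooling load / COP.
# The COP values below are hypothetical; pump heat is ignored.


def chiller_room_load(chip_load_kw: float, cop: float) -> tuple[float, float]:
    """Return (compressor power kW, total heat rejected to the room kW)."""
    if cop <= 0:
        raise ValueError("COP must be positive")
    compressor_kw = chip_load_kw / cop
    return compressor_kw, chip_load_kw + compressor_kw


if __name__ == "__main__":
    for cop in (2.5, 3.5, 5.0):
        work, rejected = chiller_room_load(100.0, cop)
        print(f"COP {cop}: compressor {work:.1f} kW, room load {rejected:.1f} kW")
```

For a 100 kW chip load and a COP of 2.5, the room must absorb 140 kW, and that whole amount still has to be removed by the room conditioning system.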

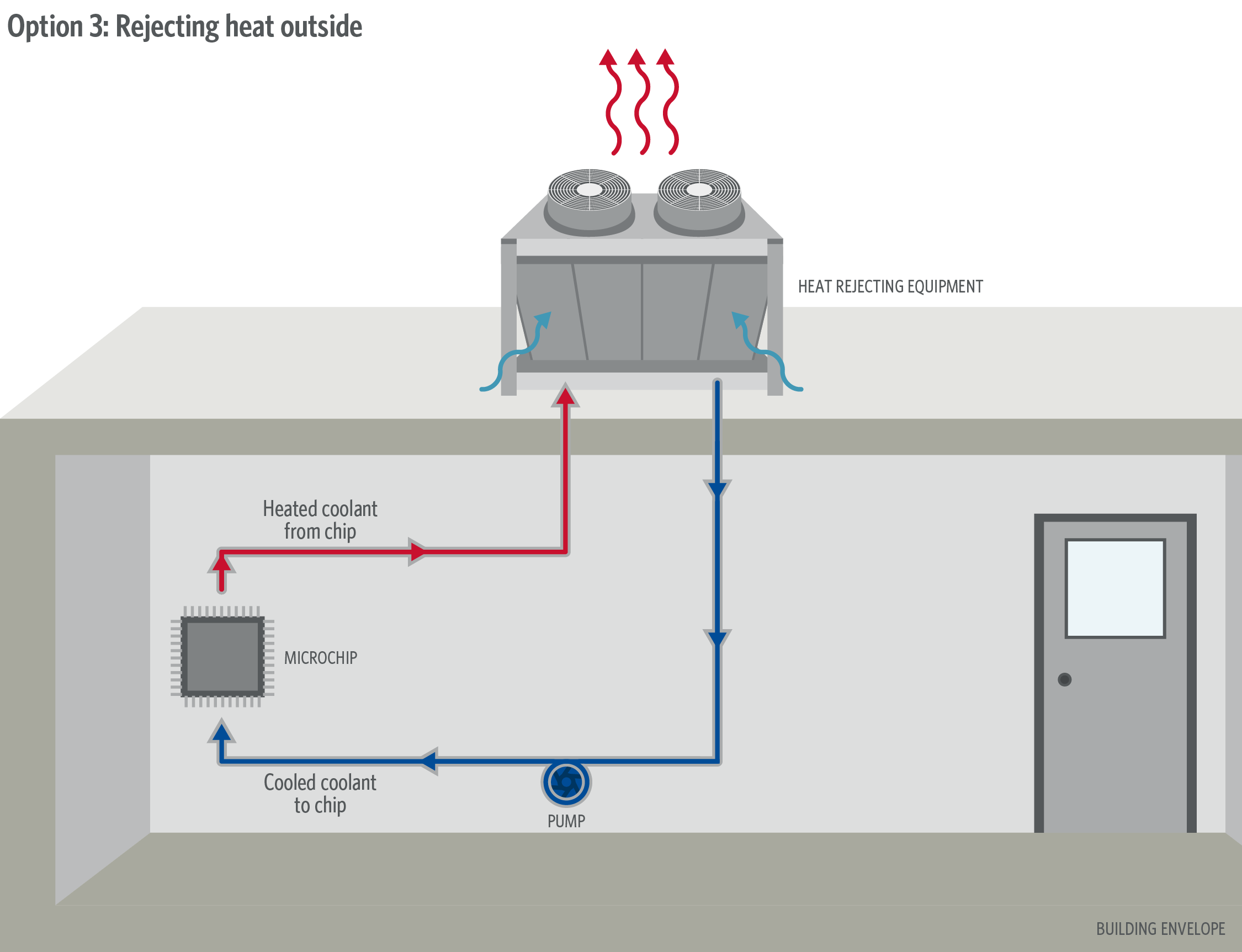

Option 3: Send the Heat Directly Outside

Because computer chips tolerate relatively high temperatures, direct-to-chip cooling loops can operate at higher temperatures than other cooling water loops. Supply and return water temperatures in direct-to-chip loops commonly exceed 100 degrees Fahrenheit (37.8 degrees Celsius). This offers a unique and sustainable solution: reject the heat directly to the ambient air.

Dry coolers and air-cooled chillers located outdoors can take advantage of these high loop temperatures to reject heat directly to the ambient air, using compressor-less free cooling for most of the year. This makes these systems much more efficient than typical central cooling plants. As an additional benefit, outdoor equipment can be fitted with evaporative pads or spray nozzles that trade water consumption for lower energy use. Water usage in data centres is a sensitive issue: cooler climates, where peak ambient temperatures stay below the maximum coolant temperature the chips allow, are preferred, yet locations with access to grey water or a stable water supply may be able to reduce energy use dramatically.
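Whether a site suits compressor-less cooling can be screened by counting the hours in which the outdoor air, plus the dry cooler’s approach temperature, is still at or below the loop supply setpoint. The sketch below assumes a hypothetical 100 °F (37.8 °C) supply setpoint, a hypothetical 5 K approach, and a short hypothetical list of hourly dry-bulb temperatures; a real study would use a full year of local weather data.

```python
# Sketch: estimate how often an outdoor dry cooler could meet the loop supply
# temperature without a compressor. Free cooling is assumed possible whenever
# ambient dry-bulb + the dry cooler's approach <= the required supply temperature.
# The setpoint, approach and hourly temperatures below are hypothetical.


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def free_cooling_hours(ambient_c: list[float], supply_setpoint_c: float,
                       approach_k: float) -> int:
    """Count hours where the dry cooler alone can reach the supply setpoint."""
    return sum(1 for t in ambient_c if t + approach_k <= supply_setpoint_c)


if __name__ == "__main__":
    setpoint_c = fahrenheit_to_celsius(100.0)  # hypothetical 100 F supply
    hourly_ambient_c = [12.0, 18.5, 24.0, 29.5, 31.0, 35.2]  # hypothetical sample
    hours = free_cooling_hours(hourly_ambient_c, setpoint_c, approach_k=5.0)
    print(f"Setpoint {setpoint_c:.1f} C: {hours} of {len(hourly_ambient_c)} hours free-cooled")
```

When evaporative pads or sprays are used, the comparison would use the lower, pre-cooled air temperature entering the coil (somewhere between the dry-bulb and wet-bulb temperatures) instead of the dry-bulb value.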

This solution capitalizes on efficiency. The other options reject the data centre’s heat into the building’s central cooling system, so the central cooling plant carries a similar total cooling load. This solution removes that load from the central cooling plant and handles it at localized, more efficient points.

However, outdoor cooling equipment is not the right solution for every data centre. Installing outdoor equipment, pumps, and the necessary piping can be unfavourable when retrofitting existing data centres, as it would cause significant downtime and capital expenditure.

Next Steps

As data centre clients seek to implement cutting-edge solutions, the industry, working with operators, should embrace the latest and most powerful computer chips. Traditional air-cooling systems can no longer remove enough heat from high-density server racks, so data centre clients and operators must look to liquid-cooling solutions. All stakeholders must carefully consider the design of such systems to optimize effectiveness, efficiency, maintainability and reliability while minimizing downtime, risk and cost. Each application is unique, but the method of heat rejection can be tailored to the needs of each data hall, upgrading IT infrastructure to support the new generation of computing.

Sources For Transistor Counts

- Intel 4004 transistor count: The Story of the Intel® 4004

- Intel planning to pack one trillion transistors onto a chip by 2030: claimed by Intel at the IEEE International Electron Devices Meeting (IEDM)